Kubernetes News

-

Introducing Headlamp AI Assistant

This announcement originally appeared on the Headlamp blog.

To simplify Kubernetes management and troubleshooting, we're thrilled to introduce Headlamp AI Assistant: a powerful new plugin for Headlamp that helps you understand and operate your Kubernetes clusters and applications with greater clarity and ease.

Whether you're a seasoned engineer or just getting started, the AI Assistant offers:

- Fast time to value: Ask questions like "Is my application healthy?" or "How can I fix this?" without needing deep Kubernetes knowledge.

- Deep insights: Start with high-level queries and dig deeper with prompts like "List all the problematic pods" or "How can I fix this pod?"

- Focused & relevant: Ask questions in the context of what you're viewing in the UI, such as "What's wrong here?"

- Action-oriented: Let the AI take action for you, like "Restart that deployment", with your permission.

Here is a demo of the AI Assistant in action as it helps troubleshoot an application running with issues in a Kubernetes cluster:

Hopping on the AI train

Large Language Models (LLMs) have transformed not just how we access data but also how we interact with it. The rise of tools like ChatGPT opened a world of possibilities, inspiring a wave of new applications. Asking questions or giving commands in natural language is intuitive, especially for users who aren't deeply technical. Now everyone can quickly ask how to do X or Y, without feeling awkward or having to traverse pages and pages of documentation like before.

Headlamp AI Assistant therefore brings a conversational UI to Headlamp, powered by LLMs that users configure with their own API keys, which gives them control over which model powers the assistant. It is available as a Headlamp plugin, making it easy to integrate into your existing setup. Once the plugin is installed and configured, the assistant becomes part of the Headlamp UI, ready to respond to contextual queries and perform actions directly from the interface.

Context is everything

As expected, the AI Assistant is focused on helping users with Kubernetes concepts. Yet, while there is a lot of value in responding to Kubernetes-related questions from Headlamp's UI, we believe that the greatest benefit of such an integration comes when it can use the context of what the user is seeing in the application. So, the Headlamp AI Assistant knows what you're currently viewing in Headlamp, and this makes the interaction feel more like working with a human assistant.

For example, if a pod is failing, users can simply ask "What's wrong here?" and the AI Assistant will respond with the root cause, like a missing environment variable or a typo in the image name. Follow-up prompts like "How can I fix this?" allow the AI Assistant to suggest a fix, streamlining what used to take multiple steps into a quick, conversational flow.

Sharing the context from Headlamp is not a trivial task though, so it's something we will keep working on perfecting.

Tools

Context from the UI is helpful, but sometimes additional capabilities are needed. If the user is viewing the pod list and wants to identify problematic deployments, switching views should not be necessary. To address this, the AI Assistant includes support for a Kubernetes tool. This allows asking questions like "Get me all deployments with problems" prompting the assistant to fetch and display relevant data from the current cluster. Likewise, if the user requests an action like "Restart that deployment" after the AI points out what deployment needs restarting, it can also do that. In case of "write" operations, the AI Assistant does check with the user for permission to run them.

AI Plugins

Although the initial version of the AI Assistant is already useful for Kubernetes users, future iterations will expand its capabilities. Currently, the assistant supports only the Kubernetes tool, but further integration with Headlamp plugins is underway. Similarly, we could get richer insights for GitOps via the Flux plugin, monitoring through Prometheus, package management with Helm, and more.

And of course, as the popularity of MCP grows, we are looking into how to integrate it as well, for a more plug-and-play fashion.

Try it out!

We hope this first version of the AI Assistant helps users manage Kubernetes clusters more effectively and assists newcomers in navigating the learning curve. We invite you to try out this early version and give us your feedback. The AI Assistant plugin can be installed from Headlamp's Plugin Catalog in the desktop version, or by using the container image when deploying Headlamp. Stay tuned for future versions of the Headlamp AI Assistant!

-

Kubernetes v1.34 Sneak Peek

Kubernetes v1.34 is coming at the end of August 2025. This release will not include any removal or deprecation, but it is packed with an impressive number of enhancements. Here are some of the features we are most excited about in this cycle!

Please note that this information reflects the current state of v1.34 development and may change before release.

Featured enhancements of Kubernetes v1.34

The following list highlights some of the notable enhancements likely to be included in the v1.34 release, but is not an exhaustive list of all planned changes. This is not a commitment and the release content is subject to change.

The core of DRA targets stable

Dynamic Resource Allocation (DRA) provides a flexible way to categorize, request, and use devices like GPUs or custom hardware in your Kubernetes cluster.

Since the v1.30 release, DRA has been based around claiming devices using structured parameters that are opaque to the core of Kubernetes. The relevant enhancement proposal, KEP-4381, took inspiration from dynamic provisioning for storage volumes. DRA with structured parameters relies on a set of supporting API kinds: ResourceClaim, DeviceClass, ResourceClaimTemplate, and ResourceSlice API types under resource.k8s.io, while extending the .spec for Pods with a new resourceClaims field. The core of DRA is targeting graduation to stable in Kubernetes v1.34.

With DRA, device drivers and cluster admins define device classes that are available for use. Workloads can claim devices from a device class within device requests. Kubernetes allocates matching devices to specific claims and places the corresponding Pods on nodes that can access the allocated devices. This framework provides flexible device filtering using CEL, centralized device categorization, and simplified Pod requests, among other benefits.
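The allocation flow described above can be illustrated with a toy model. This is only a sketch under stated assumptions: the Device and DeviceClass classes, the allocate function, and the attribute names are hypothetical and do not mirror the real resource.k8s.io API, and a plain Python predicate stands in for a CEL selector.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Device:
    """Hypothetical stand-in for a device advertised by a driver."""
    name: str
    node: str
    attributes: Dict[str, object]
    allocated_to: Optional[str] = None  # name of the claim holding the device


@dataclass
class DeviceClass:
    """Hypothetical device class; the predicate plays the role of a CEL selector."""
    name: str
    selector: Callable[[Dict[str, object]], bool]


def allocate(claim_name: str, device_class: DeviceClass,
             devices: List[Device]) -> Optional[Device]:
    """Give the claim the first free device that matches the class, if any."""
    for device in devices:
        if device.allocated_to is None and device_class.selector(device.attributes):
            device.allocated_to = claim_name
            return device
    return None


devices = [
    Device("gpu-0", "node-a", {"vendor": "example.com", "memory_gib": 16}),
    Device("gpu-1", "node-b", {"vendor": "example.com", "memory_gib": 80}),
]
big_gpu = DeviceClass("big-gpu", lambda attrs: attrs["memory_gib"] >= 40)

first = allocate("training-claim", big_gpu, devices)
second = allocate("another-claim", big_gpu, devices)

# The Pod that uses "training-claim" would have to run on first.node.
print(first.name, first.node)
# No matching device is left for the second claim.
print(second)
```

In this model the only matching device lives on one node, so the Pod that references the first claim is tied to that node, and the second claim stays unallocated until a matching device is free.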

Once this feature has graduated, the resource.k8s.io/v1 APIs will be available by default.

ServiceAccount tokens for image pull authentication

The ServiceAccount token integration for kubelet credential providers is likely to reach beta and be enabled by default in Kubernetes v1.34. This allows the kubelet to use these tokens when pulling container images from registries that require authentication.

That support already exists as alpha, and is tracked as part of KEP-4412.

The existing alpha integration allows the kubelet to use short-lived, automatically rotated ServiceAccount tokens (that follow OIDC-compliant semantics) to authenticate to a container image registry. Each token is scoped to one associated Pod; the overall mechanism replaces the need for long-lived image pull Secrets.

Adopting this new approach reduces security risks, supports workload-level identity, and helps cut operational overhead. It brings image pull authentication closer to modern, identity-aware good practice.

Pod replacement policy for Deployments

After a change to a Deployment, terminating pods may stay up for a considerable amount of time and may consume additional resources. As part of KEP-3973, the .spec.podReplacementPolicy field will be introduced (as alpha) for Deployments.

If your cluster has the feature enabled, you'll be able to select one of two policies:

TerminationStarted: Creates new pods as soon as old ones start terminating, resulting in faster rollouts at the cost of potentially higher resource consumption.

TerminationComplete: Waits until old pods fully terminate before creating new ones, resulting in slower rollouts but ensuring controlled resource consumption.

This feature makes Deployment behavior more predictable by letting you choose when new pods should be created during updates or scaling. It's beneficial when working in clusters with tight resource constraints or with workloads with long termination periods.
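To make the difference between the two policies concrete, here is a minimal sketch of how many replacement pods could be created at a given moment. The pods_to_create function is a hypothetical illustration, not the Deployment controller's logic, and it deliberately ignores rollout settings such as maxSurge and maxUnavailable.

```python
def pods_to_create(desired: int, running: int, terminating: int, policy: str) -> int:
    """Return how many new pods this toy model would create right now."""
    if policy == "TerminationStarted":
        # Terminating pods no longer count, so replacements start immediately.
        occupied = running
    elif policy == "TerminationComplete":
        # Terminating pods still count until they are fully gone.
        occupied = running + terminating
    else:
        raise ValueError(f"unknown policy: {policy}")
    return max(0, desired - occupied)


# 10 replicas wanted, 6 running, 4 old pods still shutting down.
print(pods_to_create(10, 6, 4, "TerminationStarted"))   # 4
print(pods_to_create(10, 6, 4, "TerminationComplete"))  # 0
```

With TerminationStarted the cluster briefly holds 14 pods (6 running, 4 terminating, 4 new), which is the extra resource consumption the policy description warns about.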

It's expected to be available as an alpha feature and can be enabled using the DeploymentPodReplacementPolicy and DeploymentReplicaSetTerminatingReplicas feature gates in the API server and kube-controller-manager.

Production-ready tracing for kubelet and API Server

To address the longstanding challenge of debugging node-level issues by correlating disconnected logs, KEP-2831 provides deep, contextual insights into the kubelet.

This feature instruments critical kubelet operations, particularly its gRPC calls to the Container Runtime Interface (CRI), using the vendor-agnostic OpenTelemetry standard. It allows operators to visualize the entire lifecycle of events (for example: a Pod startup) to pinpoint sources of latency and errors. Its most powerful aspect is the propagation of trace context; the kubelet passes a trace ID with its requests to the container runtime, enabling runtimes to link their own spans.

This effort is complemented by a parallel enhancement, KEP-647, which brings the same tracing capabilities to the Kubernetes API server. Together, these enhancements provide a more unified, end-to-end view of events, simplifying the process of pinpointing latency and errors from the control plane down to the node. These features have matured through the official Kubernetes release process. KEP-2831 was introduced as an alpha feature in v1.25, while KEP-647 debuted as alpha in v1.22. Both enhancements were promoted to beta together in the v1.27 release. Looking forward, Kubelet Tracing (KEP-2831) and API Server Tracing (KEP-647) are now targeting graduation to stable in the upcoming v1.34 release.
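The idea of trace context propagation can be sketched in a few lines. The example below assumes identifiers shaped like the W3C Trace Context traceparent header (version, trace ID, span ID, flags); the function names are hypothetical, and the sketch does not use the OpenTelemetry SDK or reproduce how the kubelet actually attaches context to its CRI calls.

```python
import secrets


def new_traceparent() -> str:
    """Start a new trace: random 16-byte trace ID and 8-byte span ID, sampled flag set."""
    trace_id = secrets.token_hex(16)
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-01"


def child_traceparent(parent: str) -> str:
    """Create a child span context: same trace ID, fresh span ID."""
    version, trace_id, _parent_span_id, flags = parent.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


# The caller (think: kubelet) starts a span and sends its context with the request.
kubelet_span = new_traceparent()
# The callee (think: container runtime) links its own span to the same trace.
runtime_span = child_traceparent(kubelet_span)

print(kubelet_span)
print(runtime_span)
```

Because both values carry the same trace ID, a tracing backend can stitch the caller's and callee's spans into one timeline, which is what makes the end-to-end view possible.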

PreferSameZone and PreferSameNode traffic distribution for Services

The spec.trafficDistribution field within a Kubernetes Service allows users to express preferences for how traffic should be routed to Service endpoints.

KEP-3015 deprecates PreferClose and introduces two additional values: PreferSameZone and PreferSameNode. PreferSameZone is equivalent to the current PreferClose. PreferSameNode prioritizes sending traffic to endpoints on the same node as the client.

This feature was introduced in v1.33 behind the PreferSameTrafficDistribution feature gate. It is targeting graduation to beta in v1.34 with its feature gate enabled by default.

Support for KYAML: a Kubernetes dialect of YAML

KYAML aims to be a safer and less ambiguous YAML subset, and was designed specifically for Kubernetes. Whatever version of Kubernetes you use, you'll be able to use KYAML for writing manifests and/or Helm charts. You can write KYAML and pass it as an input to any version of kubectl, because all KYAML files are also valid as YAML. With kubectl v1.34, we expect you'll also be able to request KYAML output from kubectl (as in kubectl get -o kyaml …). If you prefer, you can still request the output in JSON or YAML format.

KYAML addresses specific challenges with both YAML and JSON. YAML's significant whitespace requires careful attention to indentation and nesting, while its optional string-quoting can lead to unexpected type coercion (for example: "The Norway Bug"). Meanwhile, JSON lacks comment support and has strict requirements for trailing commas and quoted keys.

KEP-5295 introduces KYAML, which tries to address the most significant problems by:

- Always double-quoting value strings

- Leaving keys unquoted unless they are potentially ambiguous

- Always using {} for mappings (associative arrays)

- Always using [] for lists

This might sound a lot like JSON, because it is! But unlike JSON, KYAML supports comments, allows trailing commas, and doesn't require quoted keys.
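The four rules listed above are simple enough to sketch as a small emitter. This is an illustration only: to_kyaml, the key pattern, and the set of "ambiguous" words are assumptions made for this example, keys are assumed to be strings, and the exact layout that kubectl produces may differ.

```python
import json
import re

# Assumption for this sketch: keys made of these characters are safe to leave unquoted.
_SAFE_KEY = re.compile(r"^[A-Za-z_][A-Za-z0-9_.\-/]*$")
# Assumption: words that plain YAML parsers may read as booleans or null.
_AMBIGUOUS = {"yes", "no", "on", "off", "true", "false", "null", "y", "n", "~"}


def _key(key: str) -> str:
    """Leave a key unquoted unless it could be misread."""
    if _SAFE_KEY.match(key) and key.lower() not in _AMBIGUOUS:
        return key
    return json.dumps(key)


def to_kyaml(value, indent: int = 0) -> str:
    """Render a value with {} for mappings, [] for lists, and double-quoted strings."""
    pad = "  " * indent
    inner = "  " * (indent + 1)
    if isinstance(value, dict):
        if not value:
            return "{}"
        items = [f"{inner}{_key(k)}: {to_kyaml(v, indent + 1)},\n" for k, v in value.items()]
        return "{\n" + "".join(items) + pad + "}"
    if isinstance(value, list):
        if not value:
            return "[]"
        items = [f"{inner}{to_kyaml(v, indent + 1)},\n" for v in value]
        return "[\n" + "".join(items) + pad + "]"
    # Strings are always double-quoted; numbers, booleans and None use JSON spelling.
    return json.dumps(value)


manifest = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "demo"},
    "data": {"country": "NO", "no": "ambiguous key"},
}
rendered = to_kyaml(manifest)
print(rendered)
```

In the printed result the value NO is double-quoted, so it stays a string instead of being coerced to a boolean, and the key no is quoted because it is ambiguous. Every item ends with a trailing comma, which YAML flow syntax accepts and JSON does not.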

We're hoping to see KYAML introduced as a new output format for kubectl v1.34. As with all these features, none of these changes are 100% confirmed; watch this space!

As a format, KYAML is and will remain a strict subset of YAML, ensuring that any compliant YAML parser can parse KYAML documents. Kubernetes does not require you to provide input specifically formatted as KYAML, and we have no plans to change that.

Fine-grained autoscaling control with HPA configurable tolerance

KEP-4951 introduces a new feature that allows users to configure autoscaling tolerance on a per-HPA basis, overriding the default cluster-wide 10% tolerance setting that often proves too coarse-grained for diverse workloads. The enhancement adds an optional tolerance field to the HPA's spec.behavior.scaleUp and spec.behavior.scaleDown sections, enabling different tolerance values for scale-up and scale-down operations, which is particularly valuable since scale-up responsiveness is typically more critical than scale-down speed for handling traffic surges.

Released as alpha in Kubernetes v1.33 behind the HPAConfigurableTolerance feature gate, this feature is expected to graduate to beta in v1.34. This improvement helps to address scaling challenges with large deployments, where for scaling in, a 10% tolerance might mean leaving hundreds of unnecessary Pods running. Using the new, more flexible approach would enable workload-specific optimization for both responsive and conservative scaling behaviors.

Want to know more?

New features and deprecations are also announced in the Kubernetes release notes. We will formally announce what's new in Kubernetes v1.34 as part of the CHANGELOG for that release.

The Kubernetes v1.34 release is planned for Wednesday 27th August 2025. Stay tuned for updates!

Get involved

The simplest way to get involved with Kubernetes is to join one of the many Special Interest Groups (SIGs) that align with your interests. Have something you'd like to broadcast to the Kubernetes community? Share your voice at our weekly community meeting, and through the channels below. Thank you for your continued feedback and support.

- Follow us on Bluesky @kubernetes.io for the latest updates

- Join the community discussion on Discuss

- Join the community on Slack

- Post questions (or answer questions) on Server Fault or Stack Overflow

- Share your Kubernetes story

- Read more about what's happening with Kubernetes on the blog

- Learn more about the Kubernetes Release Team

-

Post-Quantum Cryptography in Kubernetes

The world of cryptography is on the cusp of a major shift with the advent of quantum computing. While powerful quantum computers are still largely theoretical for many applications, their potential to break current cryptographic standards is a serious concern, especially for long-lived systems. This is where Post-Quantum Cryptography (PQC) comes in. In this article, I'll dive into what PQC means for TLS and, more specifically, for the Kubernetes ecosystem. I'll explain what the (surprising) state of PQC in Kubernetes is and what the implications are for current and future clusters.

What is Post-Quantum Cryptography

Post-Quantum Cryptography refers to cryptographic algorithms that are thought to be secure against attacks by both classical and quantum computers. The primary concern is that quantum computers, using algorithms like Shor's Algorithm, could efficiently break widely used public-key cryptosystems such as RSA and Elliptic Curve Cryptography (ECC), which underpin much of today's secure communication, including TLS. The industry is actively working on standardizing and adopting PQC algorithms. One of the first to be standardized by NIST is the Module-Lattice Key Encapsulation Mechanism (ML-KEM), formerly known as Kyber, and now standardized as FIPS-203 (PDF download).

It is difficult to predict when quantum computers will be able to break classical algorithms. However, it is clear that we need to start migrating to PQC algorithms now, as the next section shows. To get a feeling for the predicted timeline, we can look at a NIST report covering the transition to post-quantum cryptography standards. It declares that systems using classical cryptography should be deprecated after 2030 and disallowed after 2035.

Key exchange vs. digital signatures: different needs, different timelines

In TLS, there are two main cryptographic operations we need to secure:

Key Exchange: This is how the client and server agree on a shared secret to encrypt their communication. If an attacker records encrypted traffic today, they could decrypt it in the future, if they gain access to a quantum computer capable of breaking the key exchange. This makes migrating KEMs to PQC an immediate priority.

Digital Signatures: These are primarily used to authenticate the server (and sometimes the client) via certificates. The authenticity of a server is verified at the time of connection. While important, the risk of an attack today is much lower, because the decision of trusting a server cannot be abused after the fact. Additionally, current PQC signature schemes often come with significant computational overhead and larger key/signature sizes compared to their classical counterparts.

Another significant hurdle in the migration to PQ certificates is the upgrade of root certificates. These certificates have long validity periods and are installed in many devices and operating systems as trust anchors.

Given these differences, the focus for immediate PQC adoption in TLS has been on hybrid key exchange mechanisms. These combine a classical algorithm (such as Elliptic Curve Diffie-Hellman Ephemeral (ECDHE)) with a PQC algorithm (such as ML-KEM). The resulting shared secret is secure as long as at least one of the component algorithms remains unbroken. The X25519MLKEM768 hybrid scheme is the most widely supported one.

State of PQC key exchange mechanisms (KEMs) today

Support for PQC KEMs is rapidly improving across the ecosystem.

Go: The Go standard library's crypto/tls package introduced support for X25519MLKEM768 in version 1.24 (released February 2025). Crucially, it's enabled by default when there is no explicit configuration, i.e., Config.CurvePreferences is nil.

Browsers & OpenSSL: Major browsers like Chrome (version 131, November 2024) and Firefox (version 135, February 2025), as well as OpenSSL (version 3.5.0, April 2025), have also added support for the ML-KEM based hybrid scheme.

Apple is also rolling out support for X25519MLKEM768 in version 26 of their operating systems. Given the proliferation of Apple devices, this will have a significant impact on the global PQC adoption.

For a more detailed overview of the state of PQC in the wider industry, see this blog post by Cloudflare.

Post-quantum KEMs in Kubernetes: an unexpected arrival

So, what does this mean for Kubernetes? Kubernetes components, including the API server and kubelet, are built with Go.

As of Kubernetes v1.33, released in April 2025, the project uses Go 1.24. A quick check of the Kubernetes codebase reveals that Config.CurvePreferences is not explicitly set. This leads to a fascinating conclusion: Kubernetes v1.33, by virtue of using Go 1.24, supports hybrid post-quantum X25519MLKEM768 for TLS connections by default!

You can test this yourself. If you set up a Minikube cluster running Kubernetes v1.33.0, you can connect to the API server using a recent OpenSSL client:

    $ minikube start --kubernetes-version=v1.33.0
    $ kubectl cluster-info
    Kubernetes control plane is running at https://127.0.0.1:<PORT>
    $ kubectl config view --minify --raw -o jsonpath='{.clusters[0].cluster.certificate-authority-data}' | base64 -d > ca.crt
    $ openssl version
    OpenSSL 3.5.0 8 Apr 2025 (Library: OpenSSL 3.5.0 8 Apr 2025)
    $ echo -n "Q" | openssl s_client -connect 127.0.0.1:<PORT> -CAfile ca.crt
    [...]
    Negotiated TLS1.3 group: X25519MLKEM768
    [...]
    DONE

Lo and behold, the negotiated group is X25519MLKEM768! This is a significant step towards making Kubernetes quantum-safe, seemingly without a major announcement or dedicated KEP (Kubernetes Enhancement Proposal).

The Go version mismatch pitfall

An interesting wrinkle emerged with Go versions 1.23 and 1.24. Go 1.23 included experimental support for a draft version of ML-KEM, identified as X25519Kyber768Draft00. This was also enabled by default if Config.CurvePreferences was nil. Kubernetes v1.32 used Go 1.23. However, Go 1.24 removed the draft support and replaced it with the standardized version X25519MLKEM768.

What happens if a client and server are using mismatched Go versions (one on 1.23, the other on 1.24)? They won't have a common PQC KEM to negotiate, and the handshake will fall back to classical ECC curves (e.g., X25519). How could this happen in practice?

Consider a scenario:

A Kubernetes cluster is running v1.32 (using Go 1.23 and thus X25519Kyber768Draft00). A developer upgrades their kubectl to v1.33, compiled with Go 1.24, only supporting X25519MLKEM768. Now, when kubectl communicates with the v1.32 API server, they no longer share a common PQC algorithm. The connection will downgrade to classical cryptography, silently losing the PQC protection that has been in place. This highlights the importance of understanding the implications of Go version upgrades, and the details of the TLS stack.

Limitations: packet size

One practical consideration with ML-KEM is the size of its public keys, with encoded key sizes of around 1.2 kilobytes for ML-KEM-768. This can cause the initial TLS ClientHello message not to fit inside a single TCP/IP packet, given the typical networking constraints (most commonly, the standard Ethernet frame size limit of 1500 bytes). Some TLS libraries or network appliances might not handle this gracefully, assuming the Client Hello always fits in one packet. This issue has been observed in some Kubernetes-related projects and networking components, potentially leading to connection failures when PQC KEMs are used. More details can be found at tldr.fail.

State of Post-Quantum Signatures

While KEMs are seeing broader adoption, PQC digital signatures are further behind in terms of widespread integration into standard toolchains. NIST has published standards for PQC signatures, such as ML-DSA (FIPS-204) and SLH-DSA (FIPS-205). However, implementing these in a way that's broadly usable (e.g., for PQC Certificate Authorities) presents challenges:

Larger Keys and Signatures: PQC signature schemes often have significantly larger public keys and signature sizes compared to classical algorithms like Ed25519 or RSA. For instance, Dilithium2 keys can be 30 times larger than Ed25519 keys, and certificates can be 12 times larger.

Performance: Signing and verification operations can be substantially slower. While some algorithms are on par with classical algorithms, others may have a much higher overhead, sometimes on the order of 10x to 1000x worse performance. To improve this situation, NIST is running a second round of standardization for PQC signatures.

Toolchain Support: Mainstream TLS libraries and CA software do not yet have mature, built-in support for these new signature algorithms. The Go team, for example, has indicated that ML-DSA support is a high priority, but the soonest it might appear in the standard library is Go 1.26 (as of May 2025).

Cloudflare's CIRCL (Cloudflare Interoperable Reusable Cryptographic Library) implements some PQC signature schemes like variants of Dilithium, and they maintain a fork of Go (cfgo) that integrates CIRCL. Using cfgo, it's possible to experiment with generating certificates signed with PQC algorithms like Ed25519-Dilithium2. However, this requires using a custom Go toolchain and is not yet part of the mainstream Kubernetes or Go distributions.

Conclusion

The journey to a post-quantum secure Kubernetes is underway, and perhaps further along than many realize, thanks to the proactive adoption of ML-KEM in Go. With Kubernetes v1.33, users are already benefiting from hybrid post-quantum key exchange in many TLS connections by default.

However, awareness of potential pitfalls, such as Go version mismatches leading to downgrades and issues with Client Hello packet sizes, is crucial. While PQC for KEMs is becoming a reality, PQC for digital signatures and certificate hierarchies is still in earlier stages of development and adoption for mainstream use. As Kubernetes maintainers and contributors, staying informed about these developments will be key to ensuring the long-term security of the platform.
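The Go version mismatch pitfall is easy to reproduce in a toy model of TLS group negotiation. The sketch below is an illustration under assumptions: the two preference lists are simplified stand-ins for the defaults discussed earlier, the negotiate and is_post_quantum helpers are hypothetical, and picking the first client-preferred group that the server also supports is a simplification of real TLS 1.3 behavior.

```python
from typing import List, Optional

# Simplified, assumed default group lists for illustration only.
GO_1_23_GROUPS = ["X25519Kyber768Draft00", "X25519", "P-256"]
GO_1_24_GROUPS = ["X25519MLKEM768", "X25519", "P-256"]


def negotiate(client_groups: List[str], server_groups: List[str]) -> Optional[str]:
    """Pick the first group in the client's preference order that the server supports."""
    for group in client_groups:
        if group in server_groups:
            return group
    return None


def is_post_quantum(group: Optional[str]) -> bool:
    """Name-based check that only covers the hybrid groups used in this sketch."""
    return group is not None and ("MLKEM" in group or "Kyber" in group)


matched = negotiate(GO_1_24_GROUPS, GO_1_24_GROUPS)
mismatched = negotiate(GO_1_24_GROUPS, GO_1_23_GROUPS)

print(matched, is_post_quantum(matched))        # X25519MLKEM768 True
print(mismatched, is_post_quantum(mismatched))  # X25519 False
```

The mismatched handshake still succeeds, which is exactly why the downgrade is silent: nothing fails, the connection simply ends up on a classical group.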

-

Navigating Failures in Pods With Devices

Kubernetes is the de facto standard for container orchestration, but when it comes to handling specialized hardware like GPUs and other accelerators, things get a bit complicated. This blog post dives into the challenges of managing failure modes when operating pods with devices in Kubernetes, based on insights from Sergey Kanzhelev and Mrunal Patel's talk at KubeCon NA 2024. You can follow the links to slides and recording.

The AI/ML boom and its impact on Kubernetes

The rise of AI/ML workloads has brought new challenges to Kubernetes. These workloads often rely heavily on specialized hardware, and any device failure can significantly impact performance and lead to frustrating interruptions. As highlighted in the 2024 Llama paper, hardware issues, particularly GPU failures, are a major cause of disruption in AI/ML training. You can also learn how much effort NVIDIA spends on handling device failures and maintenance in the KubeCon talk by Ryan Hallisey and Piotr Prokop, All-Your-GPUs-Are-Belong-to-Us: An Inside Look at NVIDIA's Self-Healing GeForce NOW Infrastructure (recording): they see 19 remediation requests per 1000 nodes a day! We also see data centers offering spot consumption models and overcommitting on power, making device failures commonplace and a part of the business model.

However, Kubernetes’s view on resources is still very static. The resource is either there or not. And if it is there, the assumption is that it will stay there fully functional - Kubernetes lacks good support for handling full or partial hardware failures. These long-existing assumptions combined with the overall complexity of a setup lead to a variety of failure modes, which we discuss here.

Understanding AI/ML workloads

Generally, all AI/ML workloads require specialized hardware, have challenging scheduling requirements, and are expensive when idle. AI/ML workloads typically fall into two categories - training and inference. Here is an oversimplified view of those categories’ characteristics, which are different from traditional workloads like web services:

- Training

- These workloads are resource-intensive, often consuming entire machines and running as gangs of pods. Training jobs are usually "run to completion" - but that could be days, weeks or even months. Any failure in a single pod can necessitate restarting the entire step across all the pods.

- Inference

- These workloads are usually long-running or run indefinitely, and can be small enough to consume a subset of a Node’s devices or large enough to span multiple nodes. They often require downloading huge files with the model weights.

These workload types specifically break many past assumptions:

| Before | Now |
| --- | --- |
| Can get a better CPU and the app will work faster. | Require a specific device (or class of devices) to run. |
| When something doesn’t work, just recreate it. | Allocation or reallocation is expensive. |
| Any node will work. No need to coordinate between Pods. | Scheduled in a special way - devices often connected in a cross-node topology. |
| Each Pod can be plug-and-play replaced if failed. | Pods are a part of a larger task. Lifecycle of an entire task depends on each Pod. |
| Container images are slim and easily available. | Container images may be so big that they require special handling. |
| Long initialization can be offset by slow rollout. | Initialization may be long and should be optimized, sometimes across many Pods together. |
| Compute nodes are commoditized and relatively inexpensive, so some idle time is acceptable. | Nodes with specialized hardware can be an order of magnitude more expensive than those without, so idle time is very wasteful. |

The existing failure model was relying on old assumptions. It may still work for the new workload types, but it has limited knowledge about devices and is very expensive for them. In some cases, even prohibitively expensive. You will see more examples later in this article.

Why Kubernetes still reigns supreme

This article does not go deeper into the question of why not to start fresh for AI/ML workloads, given how different they are from traditional Kubernetes workloads. Despite many challenges, Kubernetes remains the platform of choice for AI/ML workloads. Its maturity, security, and rich ecosystem of tools make it a compelling option. While alternatives exist, they often lack the years of development and refinement that Kubernetes offers. And the Kubernetes developers are actively addressing the gaps identified in this article and beyond.

The current state of device failure handling

This section outlines different failure modes and the best practices and DIY (Do-It-Yourself) solutions used today. The next section will describe a roadmap for improving the handling of those failure modes.

Failure modes: K8s infrastructure

In order to understand the failures related to the Kubernetes infrastructure, you need to understand how many moving parts are involved in scheduling a Pod on the node. The sequence of events when the Pod is scheduled in the Node is as follows:

- Device plugin is scheduled on the Node

- Device plugin is registered with the kubelet via local gRPC

- Kubelet uses device plugin to watch for devices and updates capacity of the node

- Scheduler places a user Pod on a Node based on the updated capacity

- Kubelet asks Device plugin to Allocate devices for a User Pod

- Kubelet creates a User Pod with the allocated devices attached to it

This diagram shows some of those actors involved:

As there are so many actors interconnected, every one of them and every connection may experience interruptions. This leads to many exceptional situations that are often considered failures, and may cause serious workload interruptions:

- Pods failing admission at various stages of their lifecycle

- Pods unable to run on perfectly fine hardware

- Scheduling taking an unexpectedly long time

The goal for Kubernetes is to make the interactions between these components as reliable as possible. Kubelet already implements retries, grace periods, and other techniques to improve reliability. The roadmap section goes into details on other edge cases that the Kubernetes project tracks. However, all these improvements only work when these best practices are followed:

- Configure and restart kubelet and the container runtime (such as containerd or CRI-O) as early as possible to not interrupt the workload.

- Monitor device plugin health and carefully plan for upgrades.

- Do not overload the node with less-important workloads to prevent interruption of device plugin and other components.

- Configure user pod tolerations to handle node readiness flakes.

- Configure and code graceful termination logic carefully to not block devices for too long.

Another class of Kubernetes infra-related issues is driver-related. With traditional resources like CPU and memory, no compatibility checks between the application and hardware were needed. With special devices like hardware accelerators, there are new failure modes. Device drivers installed on the node:

- Must match the hardware

- Must be compatible with the app

- Must work with other drivers (like nccl, etc.)

Best practices for handling driver versions:

- Monitor driver installer health

- Plan upgrades of infrastructure and Pods to match the version

- Have canary deployments whenever possible

Following the best practices in this section and using device plugins and device driver installers from trusted and reliable sources generally eliminate this class of failures. Kubernetes is tracking work to make this space even better.

Failure modes: device failed

There is very little handling of device failure in Kubernetes today. Device plugins report a device failure only by changing the count of allocatable devices, and Kubernetes relies on standard mechanisms like liveness probes or container failures to allow Pods to communicate the failure condition to the kubelet. However, Kubernetes does not correlate device failures with container crashes and does not offer any mitigation beyond restarting the container while it stays attached to the same device.

This is why many plugins and DIY solutions exist to handle device failures based on various signals.

Health controller

In many cases a failed device will result in unrecoverable and very expensive nodes doing nothing. A simple DIY solution is a node health controller. The controller could compare the device allocatable count with the capacity and if the capacity is greater, it starts a timer. Once the timer reaches a threshold, the health controller kills and recreates a node.
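The timer logic described above can be sketched as follows. This is a simplified illustration, not an existing controller: the class name `NodeDeviceHealthTracker` and the 600-second threshold are assumptions. Reading capacity and allocatable counts from the Node object, and actually recreating the node, are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeDeviceHealthTracker:
    """Tracks how long a node has had fewer allocatable devices than capacity."""

    threshold_seconds: float
    unhealthy_since: Optional[float] = None

    def observe(self, capacity: int, allocatable: int, now: float) -> bool:
        """Record one observation; return True when the node should be recreated."""
        if capacity > allocatable:
            if self.unhealthy_since is None:
                self.unhealthy_since = now  # start the timer
            return now - self.unhealthy_since >= self.threshold_seconds
        # All devices are allocatable again: reset the timer.
        self.unhealthy_since = None
        return False


if __name__ == "__main__":
    tracker = NodeDeviceHealthTracker(threshold_seconds=600)  # assumed threshold
    # (timestamp in seconds, allocatable device count) for a node with 8 devices
    for now, allocatable in [(0, 8), (60, 7), (700, 7)]:
        print(now, tracker.observe(capacity=8, allocatable=allocatable, now=now))
```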

There are problems with the health controller approach:

- Root cause of the device failure is typically not known

- The controller is not workload aware

- Failed device might not be in use and you want to keep other devices running

- The detection may be too slow as it is very generic

- The node may be part of a bigger set of nodes and simply cannot be deleted in isolation without other nodes

There are variations of the health controller solving some of the problems above. The overall theme here though is that to best handle failed devices, you need customized handling for the specific workload. Kubernetes doesn’t yet offer enough abstraction to express how critical the device is for a node, for the cluster, and for the Pod it is assigned to.

Pod failure policy

Another DIY approach for device failure handling is a per-pod reaction on a failed device. This approach is applicable for training workloads that are implemented as Jobs.

A Pod can define special error codes for device failures. For example, whenever unexpected device behavior is encountered, the Pod exits with a special exit code. The Pod failure policy can then handle the device failure in a special way. Read more on Handling retriable and non-retriable pod failures with Pod failure policy.
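To make the mechanism concrete, the sketch below writes a Pod failure policy fragment as a Python dict and simulates, in a heavily simplified way, how an exit code is mapped to an action. The exit code 42 and the function `action_for_exit_code` are assumptions for illustration. The real evaluation happens in the Job controller and covers more cases, such as container names, Pod conditions, and special handling of exit code 0. When no rule matches, the failure counts toward the Job's backoffLimit, which the sketch represents as "Count".

```python
# Hypothetical exit code the training container uses to signal a device failure.
DEVICE_FAILURE_EXIT_CODE = 42

# Fragment of a Job's spec.podFailurePolicy, written as a Python dict.
pod_failure_policy = {
    "rules": [
        {
            # Do not count device failures against the Job's backoffLimit.
            "action": "Ignore",
            "onExitCodes": {"operator": "In", "values": [DEVICE_FAILURE_EXIT_CODE]},
        },
    ]
}


def action_for_exit_code(policy: dict, exit_code: int) -> str:
    """Return the action of the first matching rule, or "Count" if none match."""
    for rule in policy["rules"]:
        on_exit = rule.get("onExitCodes")
        if on_exit is None:
            continue  # rules based on Pod conditions are not covered here
        in_values = exit_code in on_exit["values"]
        if on_exit["operator"] == "In" and in_values:
            return rule["action"]
        if on_exit["operator"] == "NotIn" and not in_values:
            return rule["action"]
    return "Count"


if __name__ == "__main__":
    print(action_for_exit_code(pod_failure_policy, DEVICE_FAILURE_EXIT_CODE))
    print(action_for_exit_code(pod_failure_policy, 1))
```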

There are some problems with the Pod failure policy approach for Jobs:

- There is no well-known device failed condition, so this approach does not work for the generic Pod case

- Error codes must be coded carefully and in some cases are hard to guarantee.

- Only works with Jobs with restartPolicy: Never, due to a limitation of the Pod failure policy feature.

So, this solution has limited applicability.

Custom pod watcher

A slightly more generic approach is to implement a Pod watcher as a DIY solution or to use third-party tools offering this functionality. The Pod watcher is most often used to handle device failures for inference workloads.

Since Kubernetes just keeps a pod assigned to a device, even if the device is reportedly unhealthy, the idea is to detect this situation with the pod watcher and apply some remediation. It often involves obtaining device health status and its mapping to the Pod using Pod Resources API on the node. If a device fails, it can then delete the attached Pod as a remediation. The replica set will handle the Pod recreation on a healthy device.
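The core decision of such a watcher can be sketched as a pure function. The sketch assumes the Pod-to-device mapping has already been read from the Pod Resources API and that device health comes from some other signal. Both the data shapes and the name `pods_to_delete` are hypothetical, and the actual API calls and Pod deletion are omitted.

```python
from typing import Dict, List, Set, Tuple

PodRef = Tuple[str, str]  # (namespace, pod name)


def pods_to_delete(
    device_assignments: Dict[PodRef, Set[str]],
    unhealthy_devices: Set[str],
) -> List[PodRef]:
    """Return Pods that hold at least one unhealthy device, in sorted order."""
    return sorted(
        pod
        for pod, devices in device_assignments.items()
        if devices & unhealthy_devices
    )


if __name__ == "__main__":
    # Hypothetical device IDs and Pod names, for illustration only.
    assignments = {
        ("default", "inference-a"): {"gpu-0", "gpu-1"},
        ("default", "inference-b"): {"gpu-2"},
    }
    for namespace, name in pods_to_delete(assignments, {"gpu-1"}):
        print(f"would delete {namespace}/{name}")
```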

The other reasons to implement this watcher:

- Without it, the Pod will keep being assigned to the failed device forever.

- There is no descheduling for a Pod with restartPolicy=Always.

- There are no built-in controllers that delete Pods in CrashLoopBackoff.

Problems with the custom pod watcher:

- The signal for the pod watcher is expensive to get, and involves some privileged actions.

- It is a custom solution and it assumes the importance of a device for a Pod.

- The pod watcher relies on external controllers to reschedule a Pod.

There are more variations of DIY solutions for handling device failures or upcoming maintenance. Overall, Kubernetes has enough extension points to implement these solutions. However, some extension points require higher privilege than users may be comfortable with or are too disruptive. The roadmap section goes into more details on specific improvements in handling the device failures.

Failure modes: container code failed

When the container code fails or something bad happens to it, such as an out-of-memory condition, Kubernetes knows how to handle those cases. Either the container is restarted or, if the Pod has restartPolicy: Never, the Pod fails and it is scheduled on another node. Kubernetes has limited expressiveness on what constitutes a failure (for example, a non-zero exit code or a liveness probe failure) and how to react to such a failure (mostly either always restart or immediately fail the Pod).

This level of expressiveness is often not enough for complicated AI/ML workloads. AI/ML Pods are better rescheduled locally or even restarted in-place, as that saves image pulling time and device allocation. AI/ML Pods are often interconnected and need to be restarted together. This adds another level of complexity, and optimizing it often brings major savings in running AI/ML workloads.

There are various DIY solutions to orchestrate the handling of Pod failures. The most typical one is to wrap the main executable in a container with an orchestrator. This orchestrator can then restart the main executable whenever the job needs to be restarted because some other Pod has failed.

Solutions like this are very fragile and elaborate. When used in large training jobs, they are often worth the money saved compared to a regular JobSet delete/recreate cycle. Making these solutions less fragile and more streamlined by developing new hooks and extension points in Kubernetes will make them easy to apply to smaller jobs, benefiting everybody.

Failure modes: device degradation

Not all device failures are terminal for the overall workload or batch job. As the hardware stack gets more and more complex, misconfiguration on one of the hardware stack layers, or driver failures, may result in devices that are functional, but lagging on performance. One device that is lagging behind can slow down the whole training job.

We see reports of such cases more and more often. Kubernetes has no way to express this type of failure today, and since it is the newest failure mode, hardware vendors offer few best practices for detection and there is little third-party tooling for remediation.

Typically, these failures are detected based on observed workload characteristics, for example the expected speed of AI/ML training steps on particular hardware. Remediation for those issues is highly dependent on the workload's needs.
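One way to picture detection based on workload characteristics is to compare each worker's training step time against the median of its peers. The sketch below is only an assumption about how such a check might look: the function `find_stragglers` and the 1.25 tolerance factor are hypothetical, and a real detector would need to account for warm-up, noise, and workload-specific baselines.

```python
from statistics import median
from typing import Dict, List


def find_stragglers(step_seconds: Dict[str, float], tolerance: float = 1.25) -> List[str]:
    """Return workers whose step time exceeds tolerance * median step time."""
    if not step_seconds:
        return []
    baseline = median(step_seconds.values())
    return sorted(
        worker
        for worker, seconds in step_seconds.items()
        if seconds > baseline * tolerance
    )


if __name__ == "__main__":
    # Hypothetical per-worker step durations in seconds.
    observed = {"worker-0": 1.00, "worker-1": 1.05, "worker-2": 0.98, "worker-3": 1.90}
    print(find_stragglers(observed))
```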

Roadmap

As outlined in a section above, Kubernetes offers a lot of extension points which are used to implement various DIY solutions. The space of AI/ML is developing very fast, with changing requirements and usage patterns. SIG Node is taking a measured approach of enabling more extension points to implement the workload-specific scenarios over introduction of new semantics to support specific scenarios. This means prioritizing making information about failures readily available over implementing automatic remediations for those failures that might only be suitable for a subset of workloads.

This approach ensures there are no drastic changes for workload handling which may break existing, well-oiled DIY solutions or experiences with the existing more traditional workloads.

Many error handling techniques used today work for AI/ML, but are very expensive. SIG Node will invest in extension points to make those cheaper, with the understanding that the price cutting for AI/ML is critical.

The following is the set of specific investments we envision for various failure modes.

Roadmap for failure modes: K8s infrastructure

The area of Kubernetes infrastructure is the easiest to understand and very important to make right for the upcoming transition from Device Plugins to DRA. SIG Node is tracking many work items in this area, most notably the following:

- integrate kubelet with the systemd watchdog · Issue #127460

- DRA: detect stale DRA plugin sockets · Issue #128696

- Support takeover for devicemanager/device-plugin · Issue #127803

- Kubelet plugin registration reliability · Issue #127457

- Recreate the Device Manager gRPC server if failed · Issue #128167

- Retry pod admission on device plugin grpc failures · Issue #128043

Basically, every interaction of Kubernetes components must be reliable via either the kubelet improvements or the best practices in plugins development and deployment.

Roadmap for failure modes: device failed

For device failures, some patterns are already emerging in common scenarios that Kubernetes can support. However, the very first step is to make information about failed devices more easily available. This starts with the work in KEP 4680 (Add Resource Health Status to the Pod Status for Device Plugin and DRA).

Longer-term ideas, still to be tested, include:

- Integrate device failures into Pod Failure Policy.

- Node-local retry policies, enabling pod failure policies for Pods with restartPolicy=OnFailure and possibly beyond that.

- Ability to deschedule a Pod, including one with restartPolicy: Always, so it can get a new device allocated.

- Add device health to the ResourceSlice used to represent devices in DRA, rather than simply withdrawing an unhealthy device from the ResourceSlice.

Roadmap for failure modes: container code failed

The main improvements to handle container code failures for AI/ML workloads all target cheaper error handling and recovery. The savings come mostly from reusing pre-allocated resources as much as possible: reusing Pods by restarting containers in-place, restarting containers node-locally instead of rescheduling whenever possible, snapshotting support, and rescheduling that prioritizes the same node to save on image pulls.

Consider this scenario: a big training job needs 512 Pods to run, and one of the Pods fails. This means that all Pods need to be interrupted and synced up to restart the failed step. The most efficient way to achieve this is generally to reuse as many Pods as possible by restarting them in-place, while replacing the failed Pod to clear the error from it, as demonstrated in this picture:

It is possible to implement this scenario, but all solutions implementing it are fragile due to lack of certain extension points in Kubernetes. Adding these extension points to implement this scenario is on the Kubernetes roadmap.
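The 512-Pod scenario can be sketched as a simple planning step that separates Pods to restart in-place from Pods to replace. This is an illustration of the idea only. The names `RecoveryPlan` and `plan_recovery` are hypothetical, and the fragile part in practice, actually performing in-place restarts in a coordinated way, is exactly what the missing extension points would address.

```python
from typing import Iterable, List, NamedTuple, Set


class RecoveryPlan(NamedTuple):
    restart_in_place: List[str]  # healthy Pods: keep node, image, and devices
    replace: List[str]           # failed Pods: delete and recreate


def plan_recovery(all_pods: Iterable[str], failed_pods: Set[str]) -> RecoveryPlan:
    """Split Pods into those to restart in-place and those to replace."""
    restart: List[str] = []
    replace: List[str] = []
    for pod in all_pods:
        if pod in failed_pods:
            replace.append(pod)
        else:
            restart.append(pod)
    return RecoveryPlan(restart_in_place=restart, replace=replace)


if __name__ == "__main__":
    pods = [f"trainer-{i}" for i in range(512)]  # hypothetical Pod names
    plan = plan_recovery(pods, failed_pods={"trainer-7"})
    print(len(plan.restart_in_place), "restarted in-place;", plan.replace, "replaced")
```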

Roadmap for failure modes: device degradation

There is very little done in this area - there is no clear detection signal, very limited troubleshooting tooling, and no built-in semantics to express the "degraded" device on Kubernetes. There has been discussion of adding data on device performance or degradation in the ResourceSlice used by DRA to represent devices, but it is not yet clearly defined. There are also projects like node-healthcheck-operator that can be used for some scenarios.

We expect developments in this area from hardware vendors and cloud providers, and we expect to see mostly DIY solutions in the near future. As more users get exposed to AI/ML workloads, this is a space needing feedback on patterns used here.

Join the conversation

The Kubernetes community encourages feedback and participation in shaping the future of device failure handling. Join SIG Node and contribute to the ongoing discussions!

This blog post provides a high-level overview of the challenges and future directions for device failure management in Kubernetes. By addressing these issues, Kubernetes can solidify its position as the leading platform for AI/ML workloads, ensuring resilience and reliability for applications that depend on specialized hardware.

-

Image Compatibility In Cloud Native Environments

In industries where systems must run very reliably and meet strict performance criteria such as telecommunication, high-performance or AI computing, containerized applications often need specific operating system configuration or hardware presence. It is common practice to require the use of specific versions of the kernel, its configuration, device drivers, or system components. Despite the existence of the Open Container Initiative (OCI), a governing community to define standards and specifications for container images, there has been a gap in expression of such compatibility requirements. The need to address this issue has led to different proposals and, ultimately, an implementation in Kubernetes' Node Feature Discovery (NFD).

NFD is an open source Kubernetes project that automatically detects and reports hardware and system features of cluster nodes. This information helps users to schedule workloads on nodes that meet specific system requirements, which is especially useful for applications with strict hardware or operating system dependencies.

The need for image compatibility specification

Dependencies between containers and host OS

A container image is built on a base image, which provides a minimal runtime environment, often a stripped-down Linux userland, completely empty or distroless. When an application requires certain features from the host OS, compatibility issues arise. These dependencies can manifest in several ways:

- Drivers: Host driver versions must match the supported range of a library version inside the container to avoid compatibility problems. Examples include GPUs and network drivers.

- Libraries or Software: The container must come with a specific version or range of versions for a library or software to run optimally in the environment. Examples from high performance computing are MPI, EFA, or Infiniband.

- Kernel Modules or Features: Specific kernel features or modules must be present. Examples include having support of write protected huge page faults, or the presence of VFIO

- And more…

While containers in Kubernetes are the most likely unit of abstraction for these needs, the definition of compatibility can extend further to include other container technologies such as Singularity and other OCI artifacts such as binaries from a spack binary cache.

Multi-cloud and hybrid cloud challenges

Containerized applications are deployed across various Kubernetes distributions and cloud providers, where different host operating systems introduce compatibility challenges. Often those have to be pre-configured before workload deployment or are immutable. For instance, different cloud providers will include different operating systems like:

- RHCOS/RHEL

- Photon OS

- Amazon Linux 2

- Container-Optimized OS

- Azure Linux OS

- And more...

Each OS comes with unique kernel versions, configurations, and drivers, making compatibility a non-trivial issue for applications requiring specific features. It must be possible to quickly assess a container for its suitability to run on any specific environment.

Image compatibility initiative

An effort was made within the Open Containers Initiative Image Compatibility working group to introduce a standard for image compatibility metadata. A specification for compatibility would allow container authors to declare required host OS features, making compatibility requirements discoverable and programmable. The specification implemented in Kubernetes Node Feature Discovery is one of the discussed proposals. It aims to:

- Define a structured way to express compatibility in OCI image manifests.

- Support a compatibility specification alongside container images in image registries.

- Allow automated validation of compatibility before scheduling containers.

The concept has since been implemented in the Kubernetes Node Feature Discovery project.

Implementation in Node Feature Discovery

The solution integrates compatibility metadata into Kubernetes via NFD features and the NodeFeatureGroup API. This interface enables the user to match containers to nodes based on exposing features of hardware and software, allowing for intelligent scheduling and workload optimization.

Compatibility specification

The compatibility specification is a structured list of compatibility objects containing Node Feature Groups. These objects define image requirements and facilitate validation against host nodes. The feature requirements are described by using the list of available features from the NFD project. The schema has the following structure:

- version (string) - Specifies the API version.

- compatibilities (array of objects) - List of compatibility sets.

- rules (object) - Specifies NodeFeatureGroup to define image requirements.

- weight (int, optional) - Node affinity weight.

- tag (string, optional) - Categorization tag.

- description (string, optional) - Short description.

An example might look like the following:

version: v1alpha1
compatibilities:
- description: "My image requirements"
  rules:
  - name: "kernel and cpu"
    matchFeatures:
    - feature: kernel.loadedmodule
      matchExpressions:
        vfio-pci: {op: Exists}
    - feature: cpu.model
      matchExpressions:
        vendor_id: {op: In, value: ["Intel", "AMD"]}
  - name: "one of available nics"
    matchAny:
    - matchFeatures:
      - feature: pci.device
        matchExpressions:
          vendor: {op: In, value: ["0eee"]}
          class: {op: In, value: ["0200"]}
    - matchFeatures:
      - feature: pci.device
        matchExpressions:
          vendor: {op: In, value: ["0fff"]}
          class: {op: In, value: ["0200"]}

Client implementation for node validation

To streamline compatibility validation, we implemented a client tool that allows for node validation based on an image's compatibility artifact. In this workflow, the image author would generate a compatibility artifact that points to the image it describes in a registry via the referrers API. When a need arises to assess the fit of an image to a host, the tool can discover the artifact and verify compatibility of an image to a node before deployment. The client can validate nodes both inside and outside a Kubernetes cluster, extending the utility of the tool beyond the single Kubernetes use case. In the future, image compatibility could play a crucial role in creating specific workload profiles based on image compatibility requirements, aiding in more efficient scheduling. Additionally, it could potentially enable automatic node configuration to some extent, further optimizing resource allocation and ensuring seamless deployment of specialized workloads.
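To give a feel for what validating a node against a rule involves, here is a simplified sketch that evaluates a matchFeatures list against a dictionary of node features. It is not the NFD client's implementation. The data model (feature name mapped to key/value elements) and the functions `expression_matches` and `rule_matches` are assumptions, only the Exists and In operators are covered, and instance features such as pci.device as well as matchAny are left out.

```python
from typing import Any, Dict, List

NodeFeatures = Dict[str, Dict[str, str]]  # feature name -> {element: value}


def expression_matches(elements: Dict[str, str], key: str, expr: Dict[str, Any]) -> bool:
    """Evaluate one match expression; only Exists and In are sketched here."""
    op = expr["op"]
    if op == "Exists":
        return key in elements
    if op == "In":
        return key in elements and elements[key] in expr["value"]
    raise ValueError(f"operator not covered by this sketch: {op}")


def rule_matches(node: NodeFeatures, match_features: List[Dict[str, Any]]) -> bool:
    """A matchFeatures list matches only if every expression of every term matches."""
    for term in match_features:
        elements = node.get(term["feature"], {})
        for key, expr in term["matchExpressions"].items():
            if not expression_matches(elements, key, expr):
                return False
    return True


if __name__ == "__main__":
    # The "kernel and cpu" rule from the example specification.
    kernel_and_cpu = [
        {"feature": "kernel.loadedmodule",
         "matchExpressions": {"vfio-pci": {"op": "Exists"}}},
        {"feature": "cpu.model",
         "matchExpressions": {"vendor_id": {"op": "In", "value": ["Intel", "AMD"]}}},
    ]
    # Hypothetical features discovered on a node.
    node = {
        "kernel.loadedmodule": {"vfio-pci": ""},
        "cpu.model": {"vendor_id": "Intel"},
    }
    print("compatible" if rule_matches(node, kernel_and_cpu) else "incompatible")
```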

Examples of usage

- Define image compatibility metadata

A container image can have metadata that describes its requirements based on features discovered from nodes, like kernel modules or CPU models. The previous compatibility specification example in this article exemplified this use case.

- Attach the artifact to the image

The image compatibility specification is stored as an OCI artifact. You can attach this metadata to your container image using the oras tool. The registry only needs to support OCI artifacts; support for arbitrary types is not required. Keep in mind that the container image and the artifact must be stored in the same registry. Use the following command to attach the artifact to the image:

oras attach \
  --artifact-type application/vnd.nfd.image-compatibility.v1alpha1 <image-url> \
  <path-to-spec>.yaml:application/vnd.nfd.image-compatibility.spec.v1alpha1+yaml

- Validate image compatibility

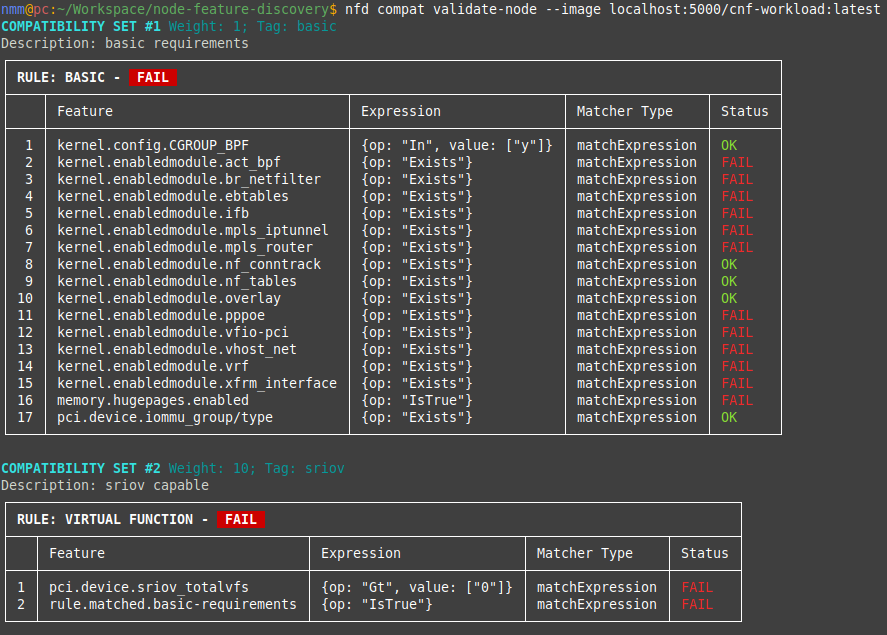

After attaching the compatibility specification, you can validate whether a node meets the image's requirements. This validation can be done using the nfd client:

nfd compat validate-node --image <image-url>

- Read the output from the client

Finally you can read the report generated by the tool or use your own tools to act based on the generated JSON report.

Conclusion

The addition of image compatibility to Kubernetes through Node Feature Discovery underscores the growing importance of addressing compatibility in cloud native environments. It is only a start, as further work is needed to integrate compatibility into scheduling of workloads within and outside of Kubernetes. However, by integrating this feature into Kubernetes, mission-critical workloads can now define and validate host OS requirements more efficiently. Moving forward, the adoption of compatibility metadata within Kubernetes ecosystems will significantly enhance the reliability and performance of specialized containerized applications, ensuring they meet the stringent requirements of industries like telecommunications, high-performance computing or any environment that requires special hardware or host OS configuration.

Get involved

Join the Kubernetes Node Feature Discovery project if you're interested in getting involved with the design and development of Image Compatibility API and tools. We always welcome new contributors.